Agent Helper Case Study

Reducing clicks, increasing adoption, and integrating seamlessly into agent workflows.

Overview

Agent Helper is an AI-powered support tool used by enterprise agents to resolve cases faster.

The initial compact design shipped to all customers as the default. Although lightweight, it struggled with adoption:

- Agents resisted using it, seeing it as “extra work.”
- Core actions like escalation and summarization took too many clicks.
- The product felt disconnected from their natural workflow.

My Role: Product Design Lead. I owned research and UX/UI, and collaborated closely with Product Management and Engineering.

Problem

- Adoption fell below expected benchmarks despite rollout to all customers.
- Too many clicks were needed to access case details and actions.
- Agents resisted new tools: anything new had to prove its value quickly.

Approach

To uncover why adoption was low, I analyzed click-path data and ran agent feedback sessions.

Findings:

- Agents wanted glanceable summaries (case + environment + sentiment) up front.
- They expected quick actions (escalate, summarize, respond) within one step.
- Technical limits prevented live auto-refresh, creating lag.

Collaboration

- Product → helped prioritize which fields to surface in the summary.
- Engineering → flagged API refresh issues; together we shifted to a pull-to-refresh design with batched updates.
- CSM Team → shared customer feedback about resistance, validating the design direction.

Solution (Before → After)

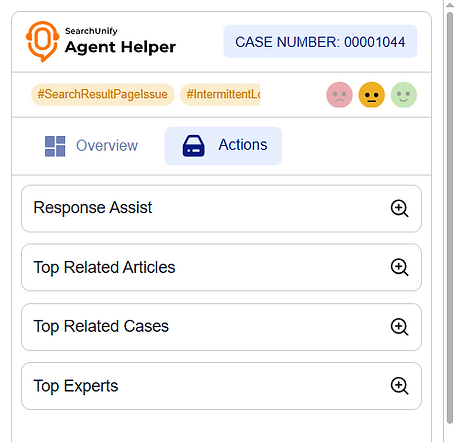

Before (Initial Design):

- Tabs with nested cards for Response Assist, Related Articles, Related Cases, and Experts.
- Agents had to click through multiple levels to get context.
- It felt like an “add-on” rather than part of the flow.

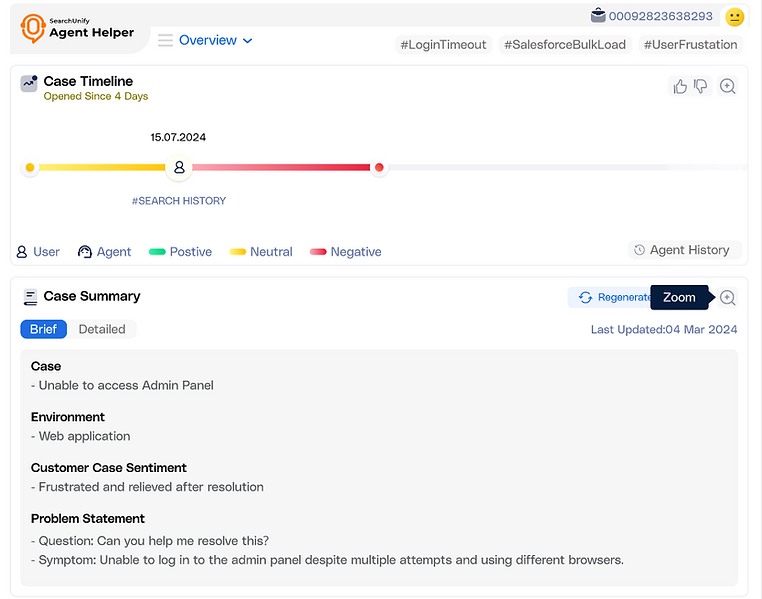

After (Redesigned Layout):

- Case Timeline → visual snapshot of events + sentiment markers.
- Case Summary (Brief vs Detailed) → quick-glance essentials, with deeper context on demand.
- Response Assist Inline Editor → AI-drafted responses with tone adjustment + 1-click copy.
- Related Articles, Cases, Experts → integrated in a clean, expandable format.

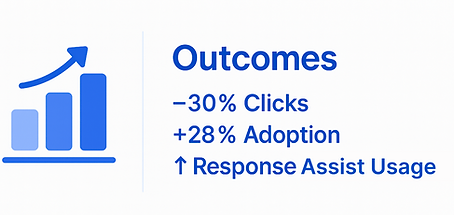

Outcome

- 30% fewer clicks for common actions (escalation, summarization).
- 28% increase in Response Assist usage as a first-draft reply tool.
- Agents reported it “felt part of the workflow” instead of an extra step.
- PMs confirmed improved adoption, and the redesign became the default for all customers.

Learning

Adoption isn’t about more features; it’s about reducing resistance. This redesign worked because we focused on ease of use, collaborated with Engineering to work within technical constraints, and integrated the tool into agents’ real workflow.